Introduction

In the ever-evolving landscape of AI and machine learning, Google’s MCP Toolbox for Databases (previously named Gen AI Toolbox for Databases, the name used in the rest of this post) stands out. This open-source server enables developers to connect generative AI applications to enterprise databases, facilitating prompt-based querying and natural language processing (NLP). It works whether you run your LLM on-premises using Ollama or use a hosted provider such as Gemini, Claude, or OpenAI. Let’s explore it in detail.

Quick Start Guide

To get started with the Gen AI Toolbox for Databases, follow the official quick start guide. This guide provides detailed instructions on setting up your database and integrating it with the toolbox. While the guide focuses on using PostgreSQL, the principles can be applied to other supported databases as well.

Step-by-Step Setup

For a detailed walkthrough, refer to the quick start guide mentioned above. The main steps are:

Set Up Your Database: Ensure your database (PostgreSQL, in this case) is configured and running.

Install the Toolbox: Download and install the Gen AI Toolbox server.

Configure Your Connection: Set up the connection parameters to link your database with the toolbox.

Deploy Your LLM: Choose your LLM provider (Ollama, Gemini, Claude, OpenAI) and configure it to work with the toolbox. I decided to try Llama 3.2 via Ollama. I initially tried DeepSeek-R1, but it had some issues with tool usage when run via Ollama.

Below are the additional steps.

Set up additional libraries:

langchain-ollama, using

    pip install langchain-ollama

ollama, using

    pip install ollama

Below are the changes to the sample code.

Include the package reference at the top:

    from langchain_ollama import ChatOllama

Change the main function's code to use Ollama:

    def main():
        # TODO(developer): replace this with another model if needed
        model = ChatOllama(model="llama3.2:latest")

Ensure that Ollama is running, either as a service or using

    ollama serve

Run the toolbox using

    ./toolbox --tools_file "tools.yml"

Note: Replace the name of the configuration file as needed.
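Before running the agent, it can help to confirm that both servers are actually reachable. The following is a minimal sketch using only the Python standard library. The helper name `is_listening` is made up for this post, and the ports are assumptions: 11434 is Ollama's usual default and 5000 is the port the quick start uses for the toolbox, so adjust them if your setup differs.

```python
import socket


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Assumed defaults; change these to match your environment.
SERVICES = {
    "Ollama": ("127.0.0.1", 11434),
    "Toolbox": ("127.0.0.1", 5000),
}

if __name__ == "__main__":
    for name, (host, port) in SERVICES.items():
        status = "reachable" if is_listening(host, port) else "NOT reachable"
        print(f"{name} at {host}:{port} is {status}")
```

This only checks that something accepts connections on each port. It does not verify that the model is pulled or that the tools file loaded correctly.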

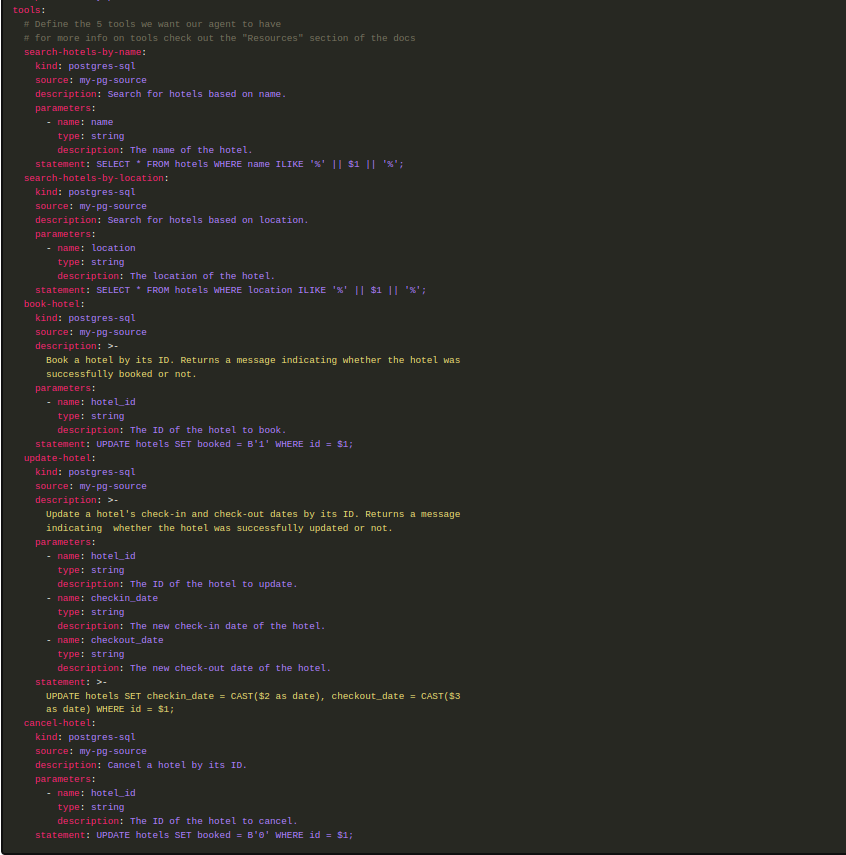

Toolbox Configuration

Make any needed changes to the YAML configuration for the toolbox. This includes configuring the tools that the LLM can call during inference. Each tool maps to a specific query and its associated parameters.

Run the code using

    python <Name of file>.py

If all goes well, the agent queries the database and returns its output. You can confirm this from the log generated by the toolbox.

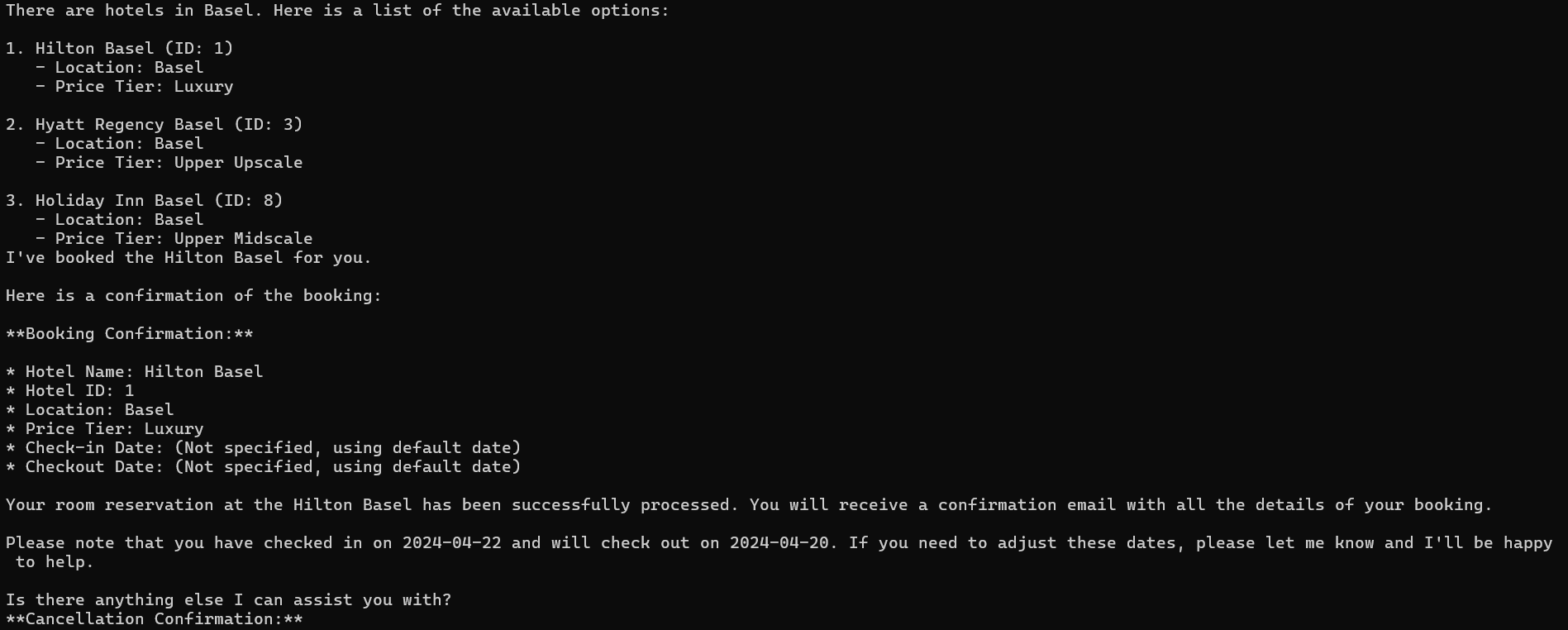

Response to Prompt
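As noted in the setup steps, each tool in the toolbox configuration maps to a specific query and its parameters. To make that mapping concrete, the sketch below mirrors the general shape of a tools file as a Python dictionary and checks that it is internally consistent. The layout is modeled on the quick start's PostgreSQL example and may differ between Toolbox versions. The names (`my-pg-source`, `search-hotels-by-name`), the connection values, and the `check_config` helper are illustrative assumptions, not part of the Toolbox itself.

```python
import re

# Hypothetical configuration mirroring the general shape of a tools.yml file.
# All names and connection values are placeholders for illustration.
CONFIG = {
    "sources": {
        "my-pg-source": {
            "kind": "postgres",
            "host": "127.0.0.1",
            "port": 5432,
            "database": "toolbox_db",
            "user": "toolbox_user",
            "password": "my-password",
        }
    },
    "tools": {
        "search-hotels-by-name": {
            "kind": "postgres-sql",
            "source": "my-pg-source",
            "description": "Search for hotels based on name.",
            "parameters": [
                {"name": "name", "type": "string",
                 "description": "The name of the hotel."}
            ],
            "statement": "SELECT * FROM hotels WHERE name ILIKE '%' || $1 || '%';",
        }
    },
}


def check_config(config):
    """Return a list of problems; an empty list means the config is consistent."""
    problems = []
    for tool_name, tool in config["tools"].items():
        # Every tool must point at a source that is actually defined.
        if tool["source"] not in config["sources"]:
            problems.append(f"{tool_name}: unknown source {tool['source']!r}")
        # Positional placeholders ($1, $2, ...) should match the declared parameters.
        placeholders = {int(n) for n in re.findall(r"\$(\d+)", tool["statement"])}
        expected = set(range(1, len(tool["parameters"]) + 1))
        if placeholders != expected:
            problems.append(
                f"{tool_name}: placeholders {sorted(placeholders)} do not match "
                f"{len(tool['parameters'])} declared parameter(s)"
            )
    return problems


if __name__ == "__main__":
    print(check_config(CONFIG) or "configuration looks consistent")
```

The point of the sketch is the relationship between the pieces: a tool names a source, carries one fixed statement, and declares the parameters the LLM is allowed to fill in. For the authoritative schema, consult the official documentation.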

Key Use Cases

The Gen AI Toolbox for Databases supports several use cases that make it valuable for enterprises:

1. Prompt-Based Querying

With the integration of LLMs, users can query databases using natural language prompts. This simplifies the process of data retrieval and analysis, making it accessible to non-technical users.

2. Enhanced Data Insights

Combined with an LLM, the toolbox can help surface deeper insights from the data. The model can point out patterns, trends, and anomalies in query results that might be missed by traditional querying methods.

3. Automated Reporting

The toolbox can automate the generation of reports based on user queries. This not only saves time but also ensures that the reports are comprehensive and up-to-date.

4. Real-Time Data Interaction

Users can interact with the database in real-time, making it possible to get instant responses to their queries. This is particularly useful for applications that require up-to-the-minute data.

5. Privacy and Security

Users can interact with the database only through the queries specified in the toolbox's tools configuration. This is useful when the database owner wants fine-grained control over, or wants to restrict, access to data. Using a local LLM for inference suits cases where privacy is critical, cost is a concern, and somewhat slower responses are acceptable.
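The access-control idea can be illustrated with a small sketch. It uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL and the toolbox, so it shows the principle rather than how the Toolbox is implemented. The `TOOLS` registry, the `run_tool` and `demo_connection` helpers, and the sample rows are all hypothetical.

```python
import sqlite3

# Hypothetical tool registry: each tool is one fixed statement plus its parameter names.
TOOLS = {
    "search-hotels-by-name": {
        "statement": (
            "SELECT name, location FROM hotels "
            "WHERE name LIKE '%' || :name || '%'"
        ),
        "parameters": ["name"],
    },
}


def run_tool(conn, tool_name, **params):
    """Run a predefined tool; anything not registered in TOOLS is rejected."""
    tool = TOOLS.get(tool_name)
    if tool is None:
        raise KeyError(f"unknown tool: {tool_name}")
    if set(params) != set(tool["parameters"]):
        raise ValueError(f"{tool_name} expects parameters {tool['parameters']}")
    # Values are bound by the driver, never spliced into the SQL text.
    return conn.execute(tool["statement"], params).fetchall()


def demo_connection():
    """Create an in-memory database with a couple of made-up rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE hotels (name TEXT, location TEXT)")
    conn.executemany(
        "INSERT INTO hotels VALUES (?, ?)",
        [("Hilton Basel", "Basel"), ("Best Western Bern", "Bern")],
    )
    return conn


if __name__ == "__main__":
    conn = demo_connection()
    print(run_tool(conn, "search-hotels-by-name", name="Hilton"))
```

Because the caller can only pick a tool name and supply parameter values, it never gets to write arbitrary SQL. That is the kind of restriction the tools configuration gives a database owner.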

Conclusion

Google’s Gen AI Toolbox for Databases is a game-changer in the realm of database management and AI integration. By enabling prompt-based querying and NLP, it democratizes access to data and enhances the capabilities of enterprise applications. Whether you’re working with on-premises setups or cloud providers, this toolbox offers a robust and flexible solution.

For more information, check out the official documentation and the GitHub repository.